Barebones AI Guide: Your First Steps for Faster Development

Alex Garrett-Smith •1d

AI is moving at such a rapid pace that if you’ve not yet started to integrate it into your daily workflow, it’s difficult to know where to start. The good news is that there are plenty of small steps you can take to dramatically boost your productivity.

You don’t need to adopt an entirely new toolchain, redesign how you work, or hand over critical decisions to an AI. In fact, the most useful gains often come from using AI in the boring, repetitive parts of development: the bits you already know how to do but don’t particularly enjoy doing over and over again.

Will AI Really Speed up My Development?

There’s a common misconception that using AI will only generate garbage and cause more headaches down the line. But if you’re already a developer and understand what the generated code is actually doing, AI can absolutely speed you up.

The best part? You don’t need to go all-in with agents working 24/7 to reap the benefits of AI-assisted development. Why not use it to save time on the repetitive, boring stuff and focus on what you enjoy? Or use it to write commit messages, help you understand bugs, or figure out the best way to approach a problem?

My goal here is to ease you into using AI for a few aspects of your daily workflow.

What Tools Do I Need?

Because there are so many IDE options, services, and agents, I’ll try to keep this as neutral as possible. At the time of writing, Cursor (an IDE) is probably the best option to get started with AI-driven development due to its simplicity. Plus, if you’ve used VS Code before, you’ll be pleased to know Cursor is a fork of VS Code, so you’ll feel right at home.

Aside from an IDE, you’ll want to be signed up to a web-based service like ChatGPT or Claude. These are helpful for planning and asking general questions and, as we’ll see shortly, are a great first step to fixing bugs.

You can also make use of tools like Claude Code, which either integrate into your IDE or run on the command line. I’ll use a variety of these to demonstrate how they work.

Rubber-Ducking Bugs

There are plenty of ways to detect, explain, and automatically fix bugs with AI, but let’s start with the easiest option: copying and pasting an error!

The following function converts a string to title case, but there’s a hidden bug here I’ve added on purpose.

    function toTitleCase(sentence) {
      return sentence
        .split(' ')
        .map(word => {
          if (word.length === 0) return '';
          return word[0].toUpperCase() + word.slice(1).toLowerCase;
        })
        .join(' ');
    }

I’ve written a simple test so we can see this fail:

    test("toTitleCase converts a sentence to title case", () => {
      expect(toTitleCase("the cat sat on the mat")).toBe("The Cat Sat On The Mat");
    });

Here’s the error we get back:

    toTitleCase converts a sentence to title case
    expect(received).toBe(expected) // Object.is equality
    Expected: "The Cat Sat On The Mat"
    Received: "Tfunction toLowerCase() { [native code] } Cfunction toLowerCase() { [native code] } Sfunction toLowerCase() { [native code] } Ofunction toLowerCase() { [native code] } Tfunction toLowerCase() { [native code] } Mfunction toLowerCase() { [native code] }"

Let’s paste the function directly into Claude (or ChatGPT, or your preferred web-based assistant) and ask what’s wrong with it. It’s also a good idea to paste the error/failing test so it has some more context.

Here’s what Claude comes back with:

Output of Claude (Web) with a fix for the toTitleCase bug.

This makes sense! We can update the code and our tests should pass.
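Claude’s answer was shown as a screenshot, so it isn’t reproduced here, but the error output points at the cause: `toLowerCase` is referenced without being called, so the function itself gets concatenated onto each first letter. Assuming that is the only change needed, a corrected version might look like this:

```javascript
function toTitleCase(sentence) {
  return sentence
    .split(' ')
    .map(word => {
      if (word.length === 0) return '';
      // toLowerCase() is now invoked; the buggy version only referenced the method
      return word[0].toUpperCase() + word.slice(1).toLowerCase();
    })
    .join(' ');
}

console.log(toTitleCase('the cat sat on the mat')); // The Cat Sat On The Mat
```

The only difference from the original is the pair of parentheses after `toLowerCase`.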

This was a fairly simple error to fix. If you’re dealing with something more in-depth, you can continue the chat with Claude/ChatGPT, giving it more information as you receive it. You’ll be surprised at how quickly it can spot things you haven’t.

Turning an Idea Into a Concrete First Step

Ever known exactly what you want to build, but you’ve frozen and can’t take the first step? AI can help unfreeze you and begin to break down an idea or feature into manageable first steps, work out the best language or framework to use, decide on packages to pull in, and more.

I’m not talking about full acceptance criteria and architecture planning (although it can do that). Instead, just start by using AI to chat, like you would a colleague.

Imagine we’re tasked with building a stepper component with Vue.js, where users can navigate through multiple steps, input data, and submit the data in the final step. Our ticket for this task is super vague and we’ve never done this before.

Task: We need a stepper component in Vue.js that allows our users to enter simple data in each step (there could be multiple steps) and then submit this data in the final step.

I’m stuck. A bunch of questions come to mind. Does this need to be reusable? Does the data need to be shown in the last step? What happens if the user refreshes their browser?

The first thing I’m going to do is paste this into Claude (Web) and ask it to generate a bunch of clarifying questions. That not only helps us as developers, but it also allows us to clarify directly with whoever is requesting this feature.

Here’s my prompt:

**Me:**

Could you generate some questions around the scope of this feature request? Keep the questions to the most important 3 questions that will allow me to get started building this.

"We need a stepper component in Vue.js that allows our users to enter simple data in each step (there could be multiple steps) and then submit this data in the final step."

And here’s the response from Claude:

**Claude:**

Here are the 3 most important questions to get started building this stepper component:

1. What data validation and navigation rules do you need? Should users be able to skip ahead to future steps, or must they complete each step in order? Do you need validation to prevent moving forward with incomplete/invalid data, and should users be able to go back and edit previous steps?

2. How should the final submission work? When the user clicks submit on the final step, should all the collected data from previous steps be sent as a single payload? Do you need any confirmation UI, loading states, or error handling for the submission process?

3. What visual/UX requirements do you have for the stepper? Do you need a progress indicator at the top showing all steps (like numbered circles or a progress bar)?

These questions cover the core architectural decisions (navigation flow, data handling, and presentation) that will significantly impact how you structure the component from the start.

This gives me a great start. I hadn’t even thought about the visual requirements.

Once I have more concrete answers to these questions, I can follow up and ask it to generate the best way forward.

**Me:**

Data is very simple (all text/number based) and users should be able to navigate back and forward between steps. There is no requirement to validate before moving on. The stepper should persist data between steps, but if the user refreshes we're fine with them losing data (for now). Visuals should show basic step numbers at the top of the component. Once we're at the final step, all data is sent within the same payload to a pre-defined API endpoint.

What's the best way to get started on this?

And here’s Claude’s response:

**Claude:**

For this Vue.js stepper component, I recommend Vue Form Wizard (vue3-form-wizard).

It handles:

- Multi-step navigation with back/forward

- Data persistence between steps

- Built-in step indicators

- Easy customization

Install: npm install vue3-form-wizard

Alternative if you want something lighter: Build a simple custom component (takes ~30-50 lines of Vue code for your use case).

Actually, this is perfect, and it looks like vue3-form-wizard covers everything I need for now. I’ve gone from “I don’t know how to approach this” to some practical first steps. And if they don’t work out (or I need further help), it’s back to Claude!
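To get a feel for what the lighter, custom alternative Claude mentions would involve, here is a minimal, framework-agnostic sketch of the state such a component needs, based on the answers given above. The `createStepper` helper and its method names are hypothetical and are not part of Vue or vue3-form-wizard. In a real Vue component, the same state would live in reactive refs.

```javascript
// Hypothetical helper for illustration only; not an API from Vue or vue3-form-wizard.
function createStepper(stepCount) {
  if (!Number.isInteger(stepCount) || stepCount < 1) {
    throw new RangeError('stepCount must be a positive integer');
  }

  let current = 0; // zero-based index of the active step
  const data = {}; // values collected so far, held in memory only (lost on refresh)

  return {
    get current() { return current; },
    get isLast() { return current === stepCount - 1; },

    // Merge the fields entered on the active step into the shared data.
    update(fields) { Object.assign(data, fields); },

    // No validation before moving on, as agreed in the requirements.
    next() { if (current < stepCount - 1) current += 1; },
    back() { if (current > 0) current -= 1; },

    // A copy of everything collected, ready to send as a single payload.
    payload() { return { ...data }; },
  };
}

const stepper = createStepper(3);
stepper.update({ name: 'Ada' });
stepper.next();
stepper.update({ age: 36 });
stepper.back(); // data from every step is still there
stepper.next();
stepper.next();
console.log(stepper.isLast, stepper.payload());
```

Seeing the moving parts laid out like this also makes it easier to judge whether a package is worth pulling in for your use case.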

Note that this interaction is very contrived. In reality, I’d chat more with Claude, narrowing down specific parts by asking clarifying questions.

This kind of back-and-forth, refining scope, asking better questions, and narrowing options is exactly the skill AIDD courses are designed to teach in a more structured way.

Writing Boring Code You Already Understand

A lot of code we write daily has been written thousands of times before. The same predictable patterns crop up in almost every project. Why write them every time?

Here are some examples:

- Form validation and error messages

- Basic tests and test setup

- Date and time handling

- Defining the basic structure of components, classes, etc.

I could go on. All of these small, hand-written bits of code add up to a lot of wasted time when you could be focusing on better things. There’s almost no risk in letting AI generate these tiny, boilerplate things for you.

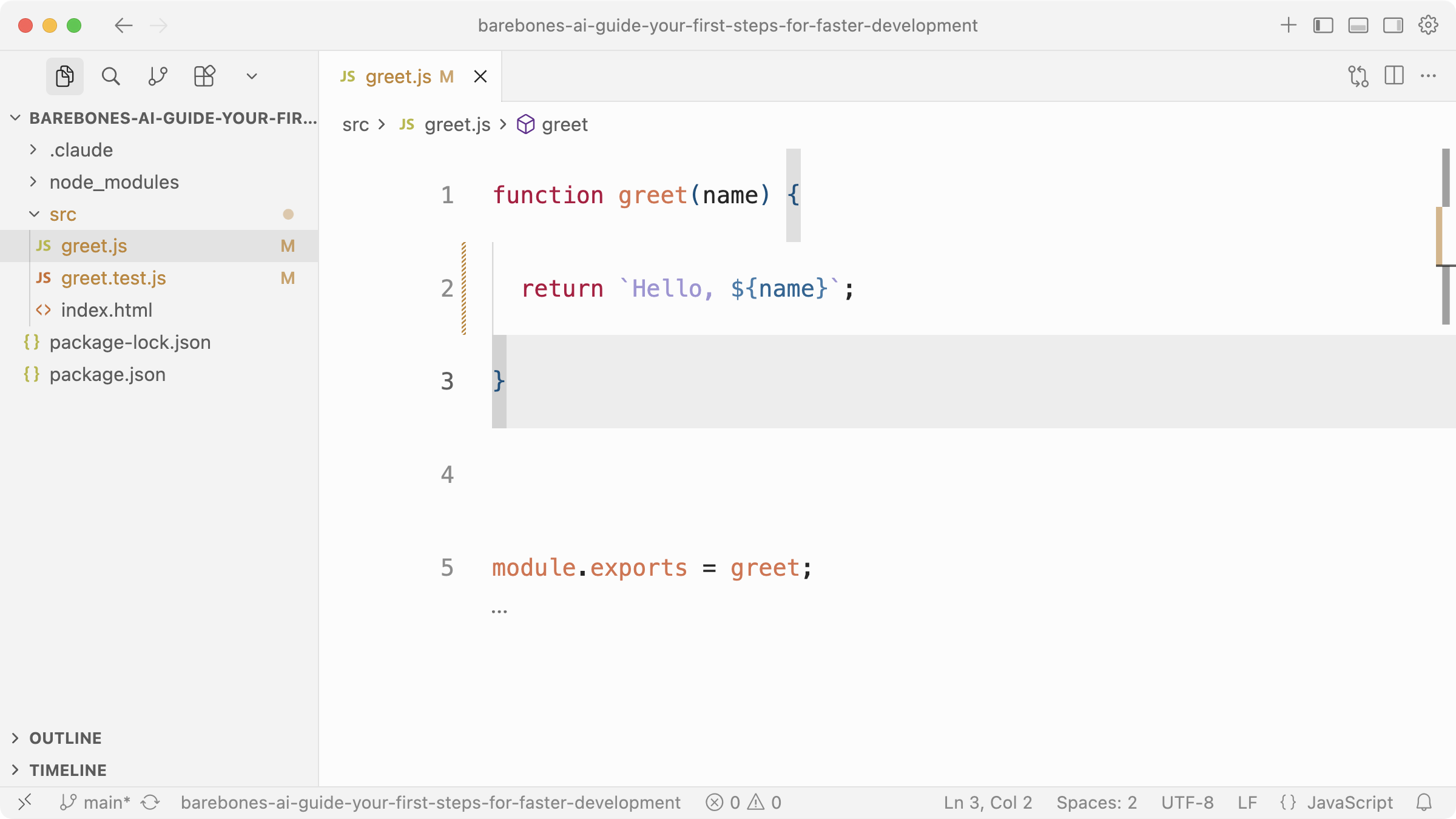

Let’s look at an example. I’ve created a simple JavaScript function within a buildable project, using Jest for tests (yes, I used AI to scaffold the project 😎). I have this open in Cursor.

The simple JavaScript function within our project, open in Cursor.

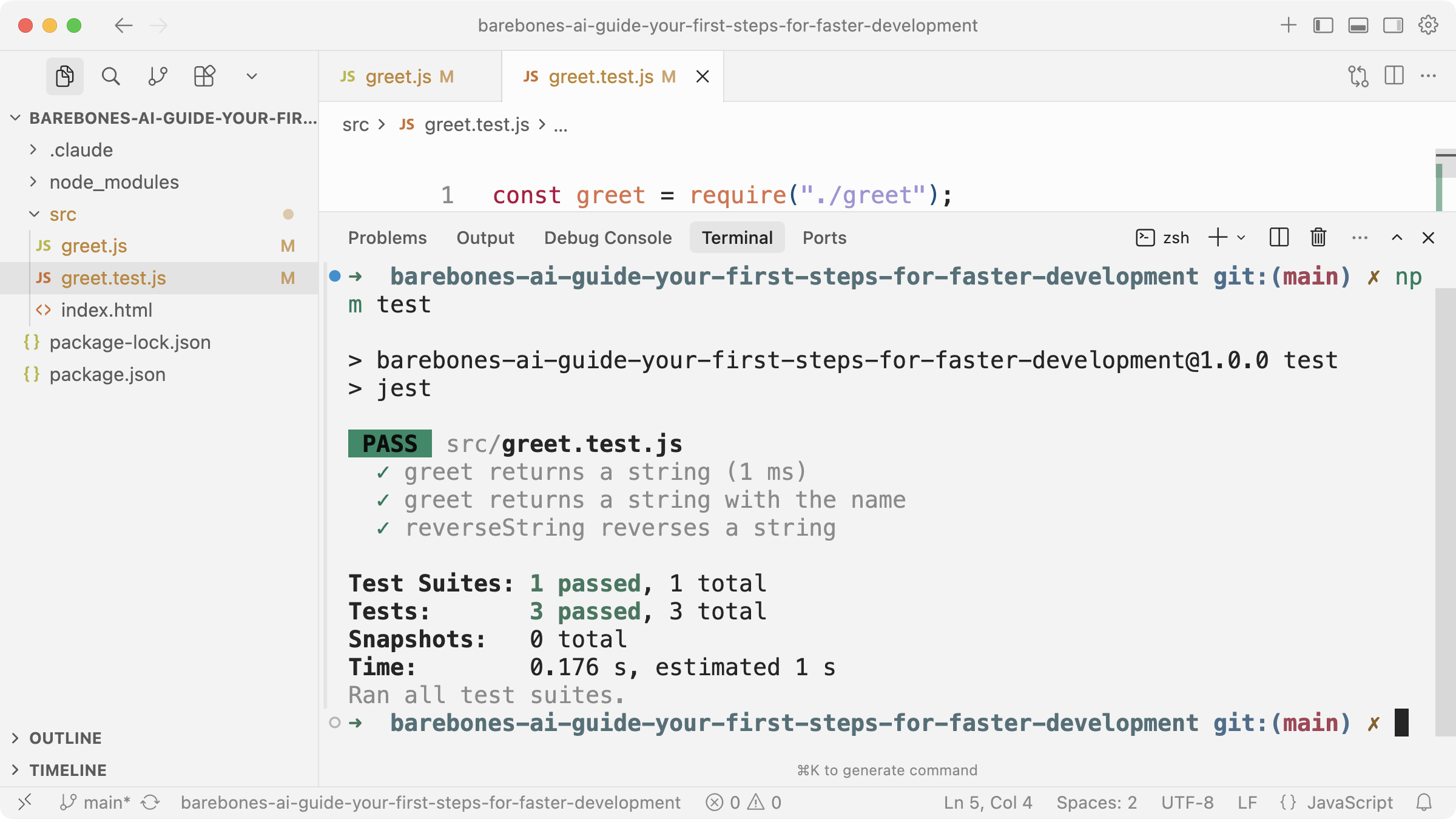

If I head over to the test file and hit tab a bunch of times, look what happens:

The automatic generation of tests by hitting the tab key repeatedly to accept each suggestion.

And it just so happens that when I run my tests, everything passes!

Showing that the tests suggested by Cursor are passing.

Cursor has context around my project and the greet.js import, so it understood exactly what to generate.

For more complex functionality, you may just want to generate the boilerplate, tweak it as needed, and repeat. As a start, small slices of code generation like this add up and save you huge amounts of time. Plus, you’ll get faster as you practice.

Oh, and don’t worry if you’re not using Cursor: almost any other AI-enabled IDE can do this too.

Explaining Unfamiliar Code

Whether you’ve jumped into a brand new codebase or can’t remember what you wrote last week, AI can help explain either snippets of code or entire codebases.

Without even opening an IDE, I’m going to use Claude Code on the command line to ask how authentication works in a project I have on my local machine.

**Me:**

Can you explain how authentication works in the someproject/ project?

After around 2 minutes and 30 seconds, Claude Code comes back with a fairly detailed overview, giving me an idea of the authentication flows, where things happen, and where things are configured.

Overview

Someproject uses Laravel Fortify for session-based authentication with Inertia.js on the frontend. It supports email/password login, two-factor authentication, and role-based access control.

Login & Registration Flow

Login: app/Http/Controllers/Auth/LoginIndexController.php renders an Inertia modal at /auth/signin. Fortify handles the actual POST /login with a custom pipeline.

Registration: app/Http/Controllers/Auth/RegisterIndexController.php at /auth/register. User creation happens in app/Actions/Fortify/CreateNewUser.php:29-50 — validates name, email, and password, then dispatches a SendRegisterWelcomeEmail job

Rate limiting is configured in app/Providers/FortifyServiceProvider.php:76-82: 20 login attempts/min per email+IP, 5 two-factor attempts/min per session.

Password Reset

Standard Fortify flow with custom Inertia views:

- app/Http/Controllers/Auth/PasswordRecoverController.php — request form

- app/Http/Controllers/Auth/PasswordResetController.php — reset form with token

- Tokens expire after 60 minutes (config/auth.php:99)

Security Features

- 2FA — TOTP with recovery codes

- CSRF — standard Laravel with webhook exclusions

- Password policy — Fortify’s Password rule for complexity

- Password confirmation timeout — 3 hours (config/auth.php:115)

[and more]

Doing this kind of thing can use up a lot of tokens, costing you more quota and money. In my opinion it’s worth it to get an overview of a project in the context of what you’re working on.

Of course, you can use this same technique to explain individual functions, single classes, or tiny snippets of code, which is much faster and cheaper and can be done as you browse the source.

Micro-Refactors

If you have tests in place and they’re passing, you might want to refactor. And why not hand this off to AI?

Below, I’ve written a purposefully convoluted function that reverses a string, and we’re going to simplify it. I have some tests written behind the scenes which I’ll use to make sure the refactored version still works as intended.

    function reverseString(str) {
      let arr = [];
      for (let i = str.length - 1; i >= 0; i--) {
        let ch = str[i];
        arr.push(ch);
      }
      let rev = '';
      for (let j = 0; j < arr.length; j++) {
        rev = rev + arr[j];
      }
      return rev;
    }

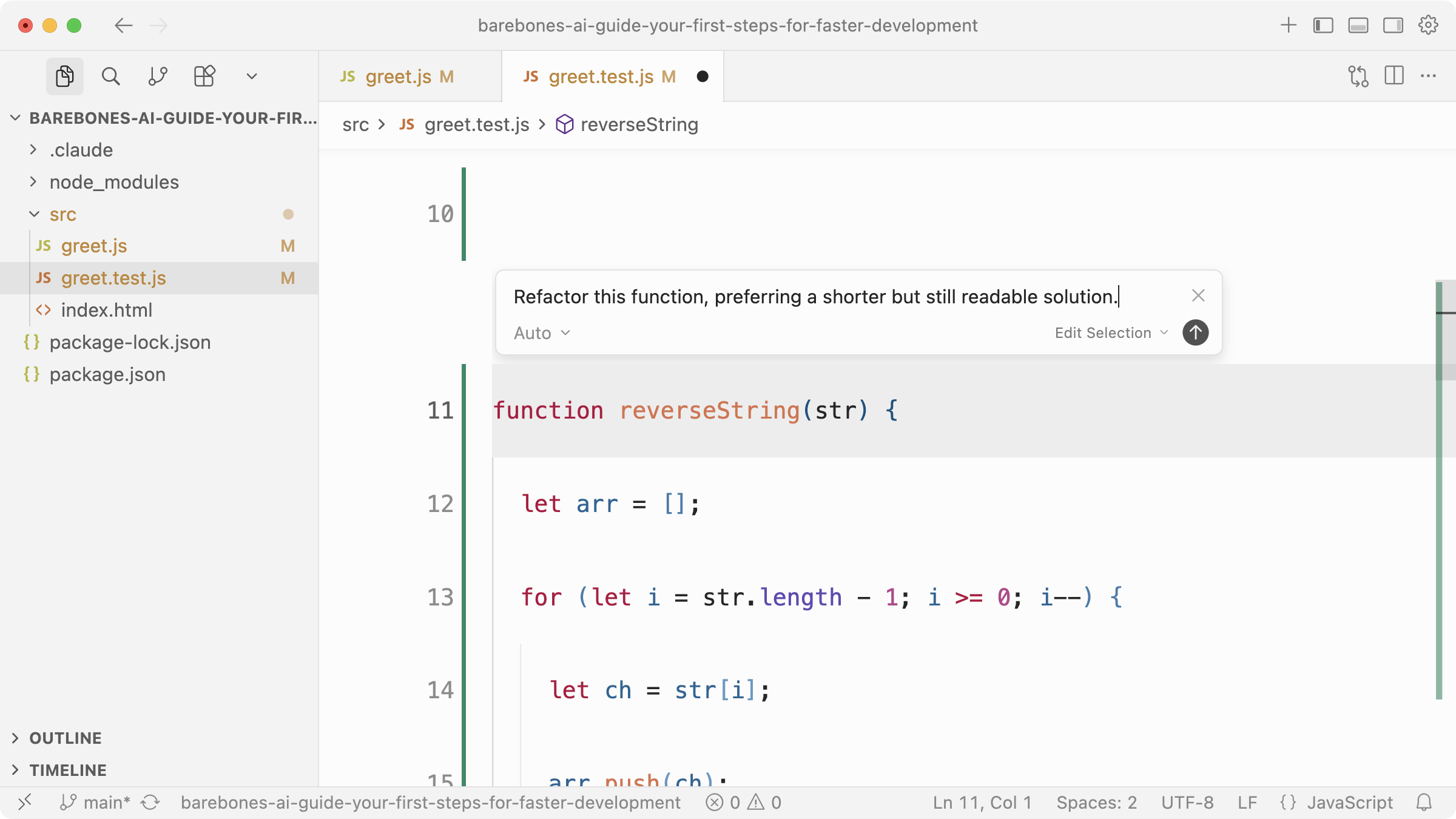

You could paste this directly into Claude/ChatGPT to refactor, but let’s try directly inside Cursor (or whichever AI-enabled IDE you’re using).

An inline refactor prompt within Cursor.

My prompt was “Refactor this function, preferring a shorter but still readable solution” and there’s good reason I included “but still readable solution”. I don’t want to refactor for the sake of making this as short as possible and compromising readability. As you experiment with prompting, you’ll get better at adding modifiers like this.

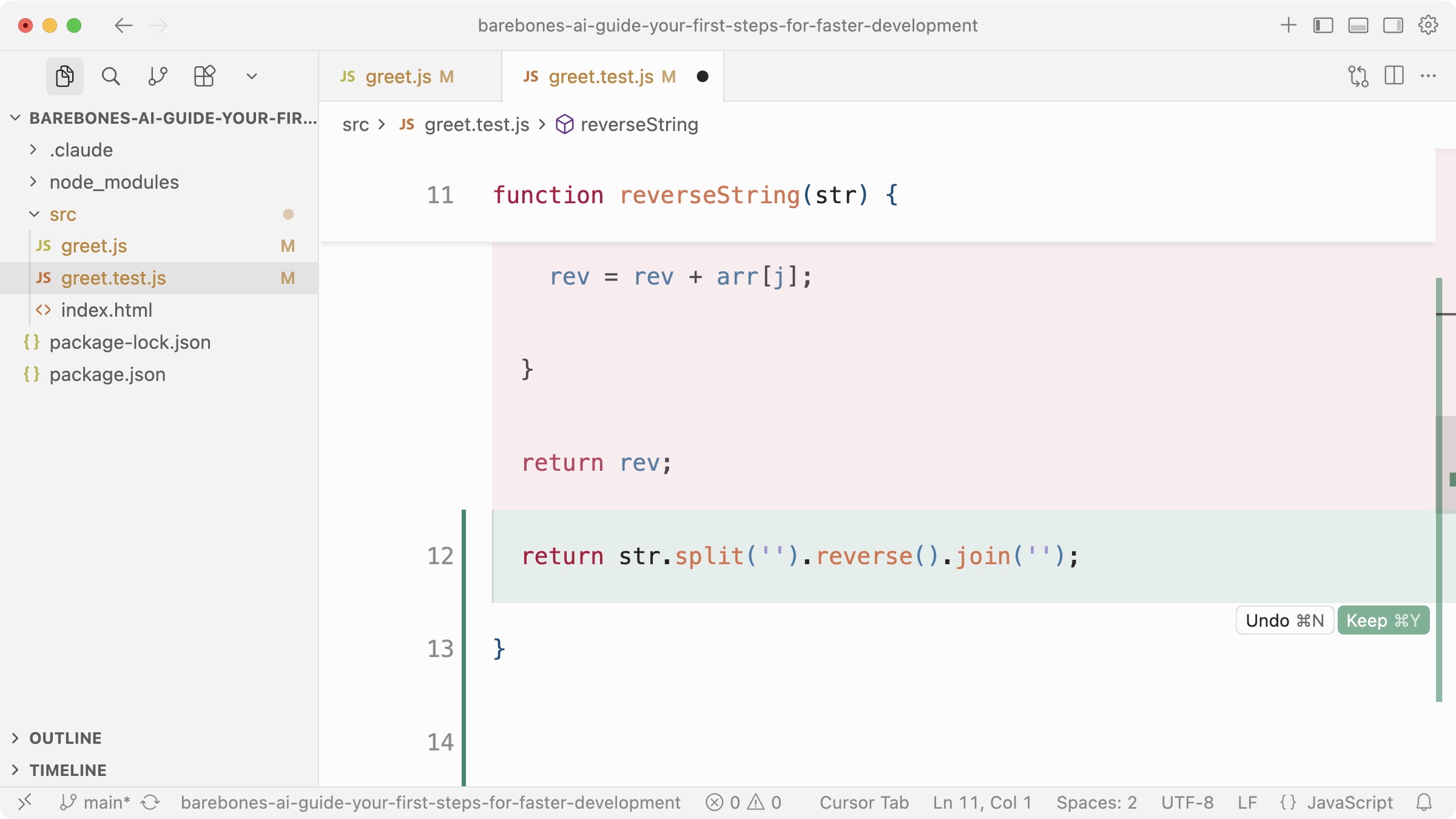

Once we’re done, we end up with the following diff. Nice! We’ll go ahead and accept the changes.

Cursor’s suggested refactor shown as a Git diff, ready to accept the change.

This is a win. We ended up with a shorter, clearer function and as long as our tests pass, I’d say this was a pretty fast, successful refactor.
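The diff itself is in the screenshot, so treat the following as an assumption about what a “shorter but still readable” result could look like, not as Cursor’s exact output:

```javascript
function reverseString(str) {
  // split('') breaks the string into UTF-16 code units, the same units the
  // index-based loop in the original walked over, so behavior is unchanged
  return str.split('').reverse().join('');
}

console.log(reverseString('hello')); // olleh
```

Whatever shape the refactor takes, rerunning the existing tests is what confirms that it still behaves the same.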

Bonus: Writing Commit Messages

Let’s face it, once we’ve wrapped up a good chunk of what we’re working on in a branch, most of us struggle to think of a descriptive enough commit message to accurately describe what we’ve done, which can cause headaches while reviewing Git history down the line.

The easiest way around this is to ask your agent to write the message for you.

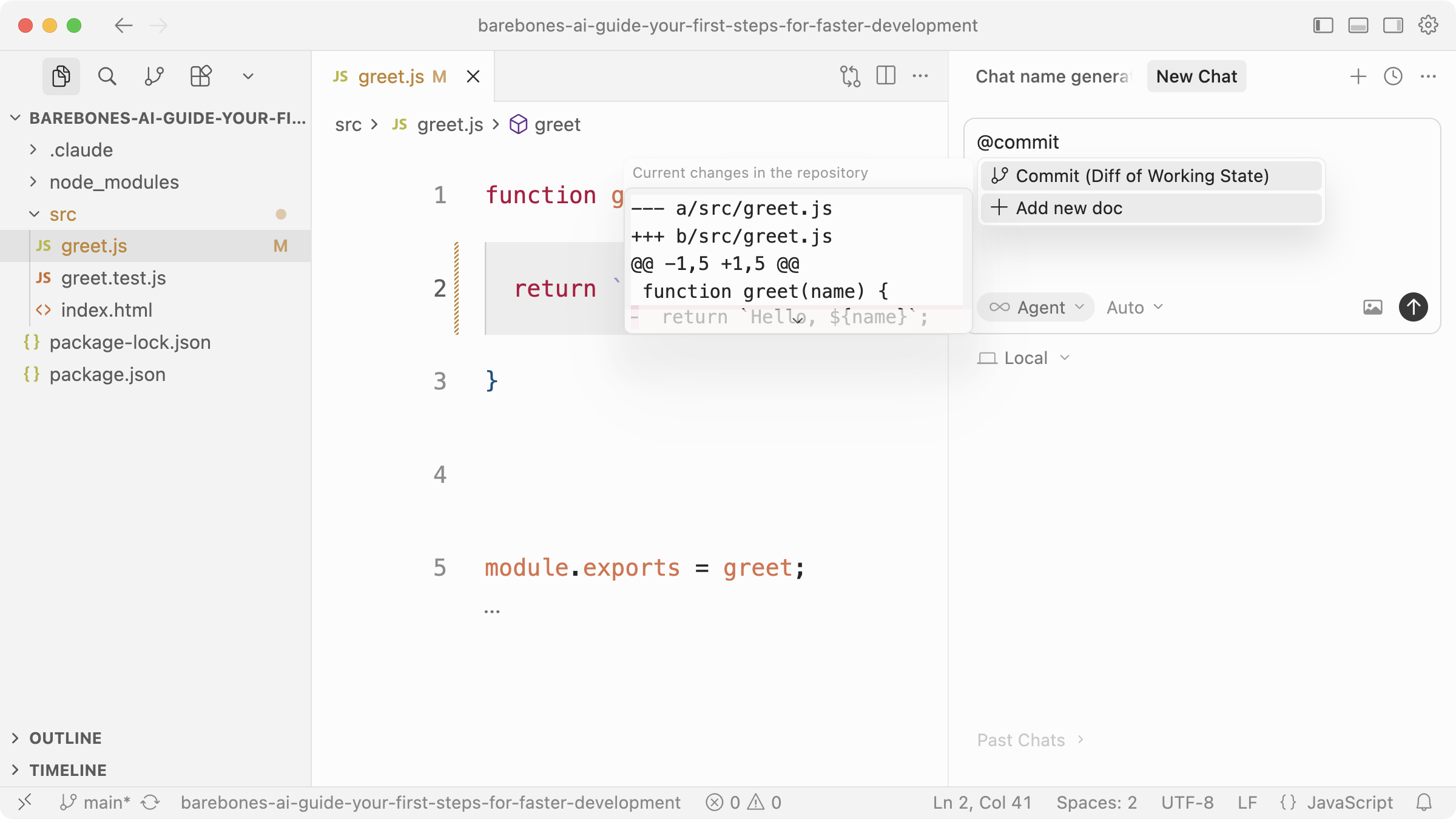

Using Cursor, we’ll use agent mode to commit our changes using @commit.

Automatically running a Git Commit with Cursor in agent mode.

Once we run this, here’s what we get:

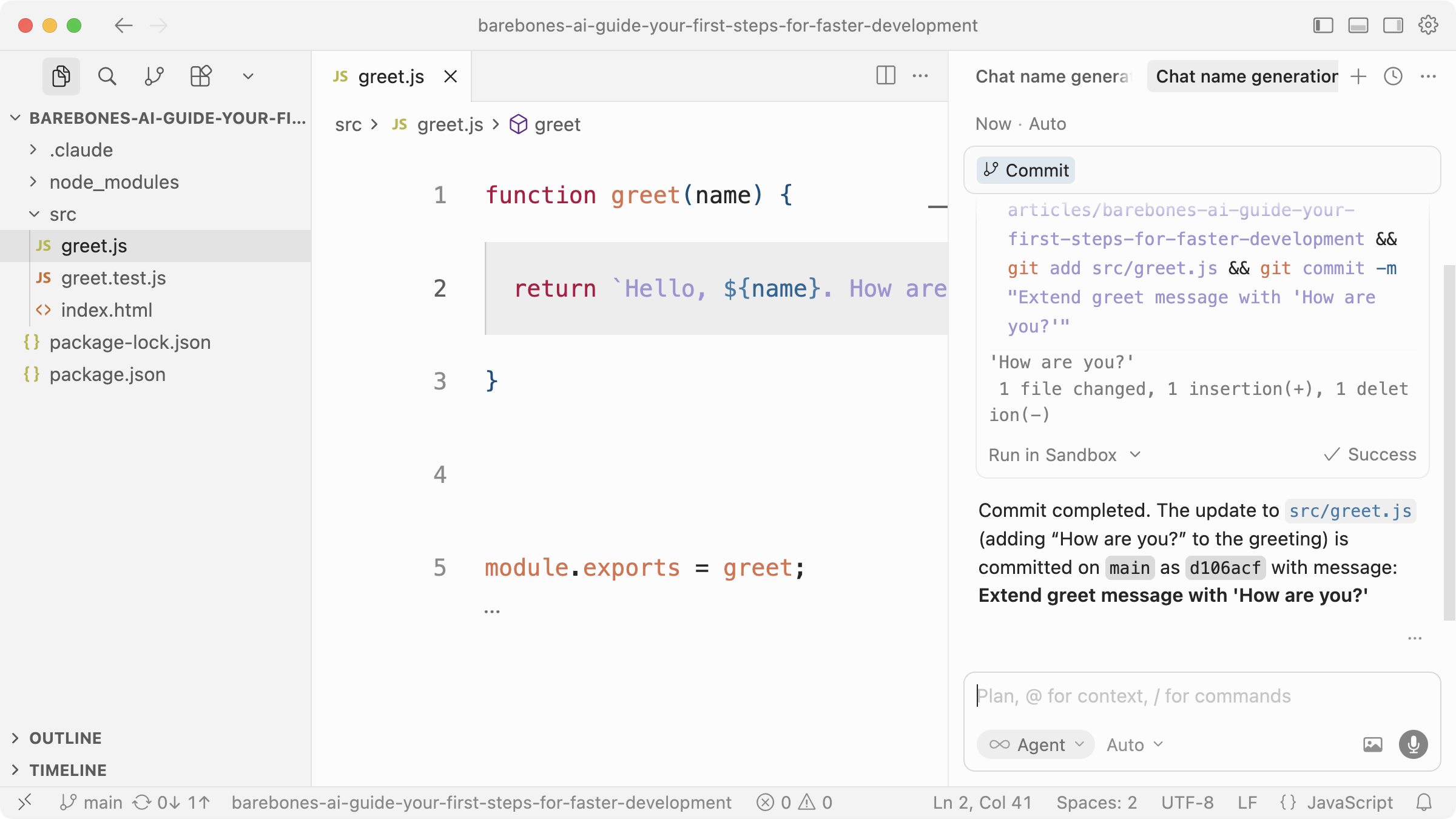

The output demonstrating the Git commit with an automatically generated commit message.

As you can see from the output above, the commit message was set to “Extend greet message with 'How are you?'”. I can now push the change, and I haven’t had to think about the commit message.

If you’re not using Cursor, you can also do this with agents like Claude Code or Codex directly from the command line. Just prompt them to commit the current changes with a message and they’ll infer what the message should be from your Git diff.

Conclusion

I’ve given you an overview of several areas where you can use AI to speed up your development. The trick is to start small (perhaps just a web-based chat) and then start to integrate AI into your everyday workflow (perhaps just starting out with autocomplete).

Using AI for development is just another skill, and you might not be super productive right away. But by taking small steps to generate and automate the boring stuff, you’ll save yourself a huge amount of time.

If you want to go further, AIDD builds on these ideas and helps you stay up to date with how AI is actually being used in development.